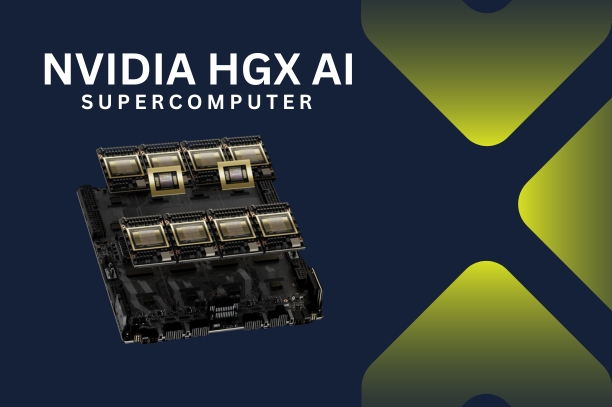

Datacenter

GPU clusters designed for deep learning

Boost your IT infrastructure with our NVIDIA GPU-powered servers, ideal for high-performance computing, AI workloads, and advanced data processing!

Workstation

Unlock your professional potential with our cutting-edge Workstation PCs and laptops. Power your productivity with Exeton Workstations!

Gaming

Level up your gaming experience with our high-performance PCs, laptops, and GPUs. Dominate the virtual world with Exeton Gaming!

Accessories

Elevate your setup with top-tier Workstation and Gaming accessories!